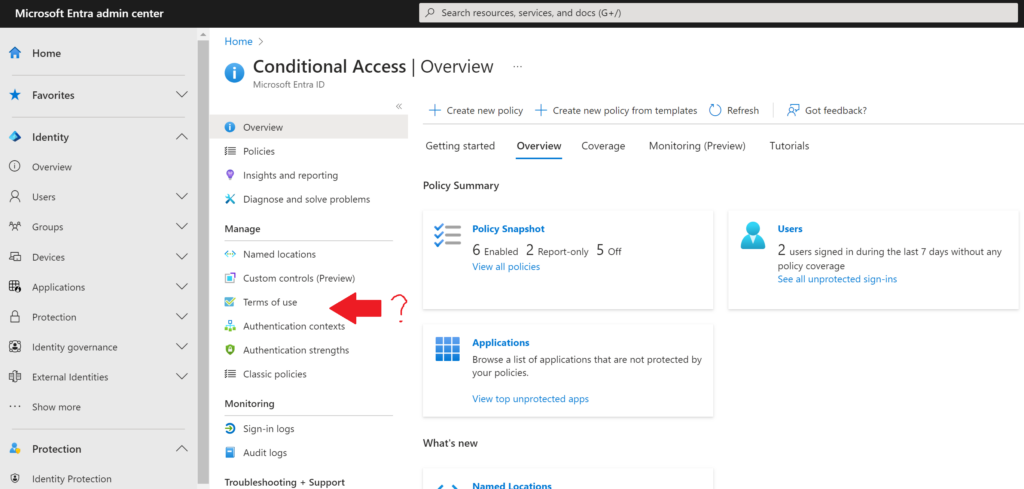

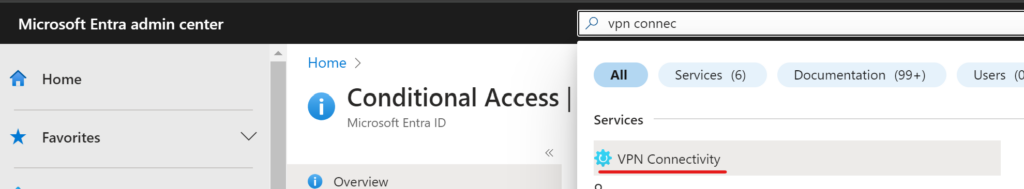

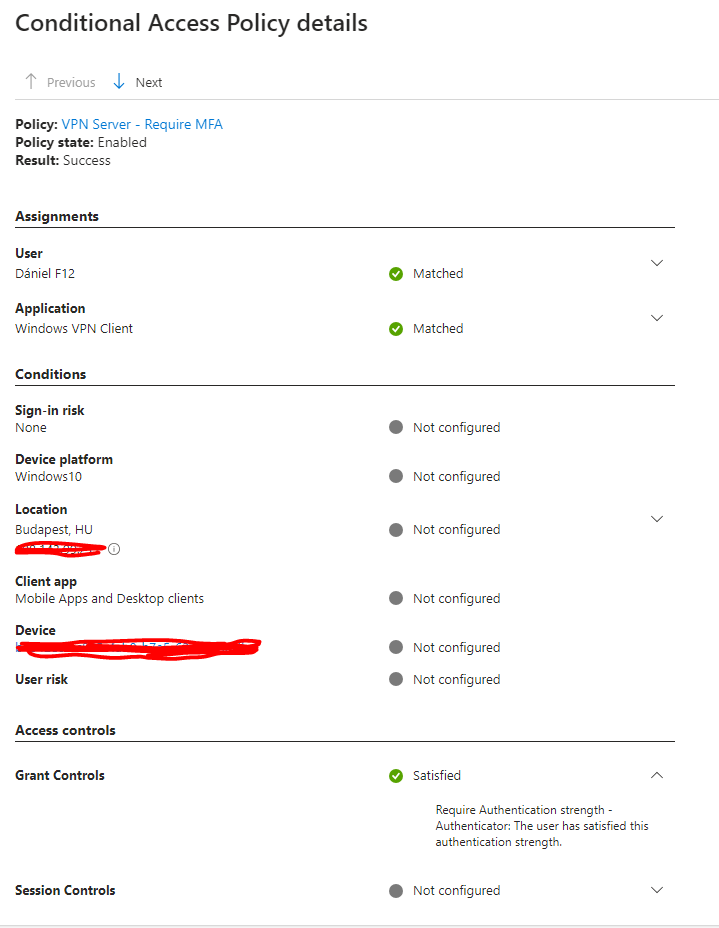

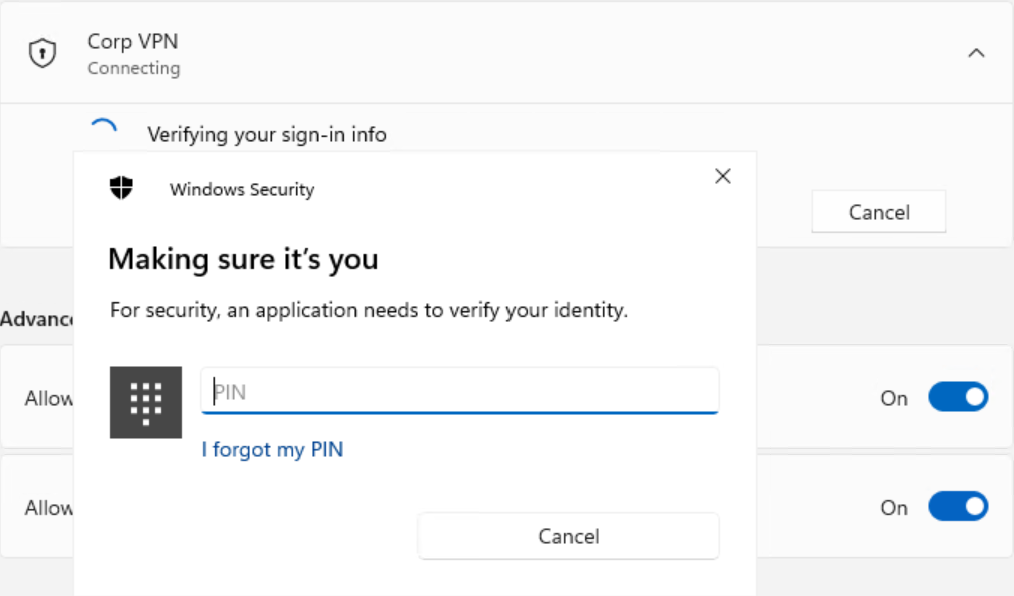

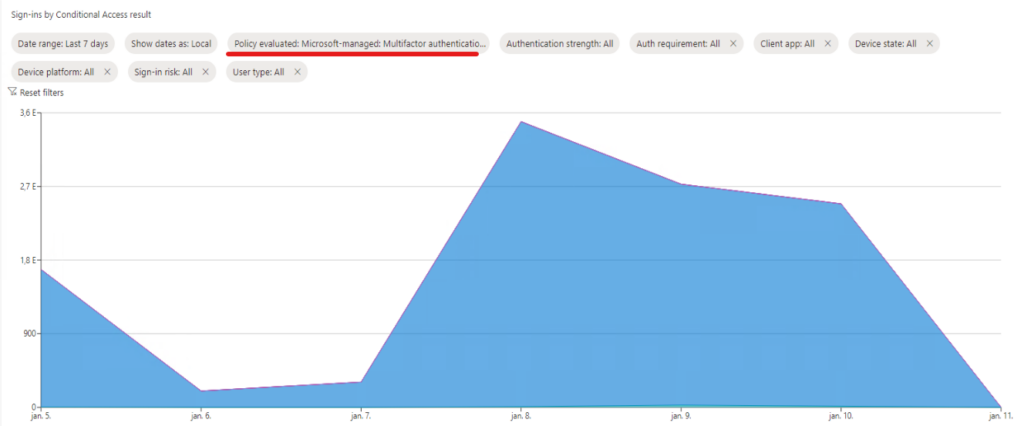

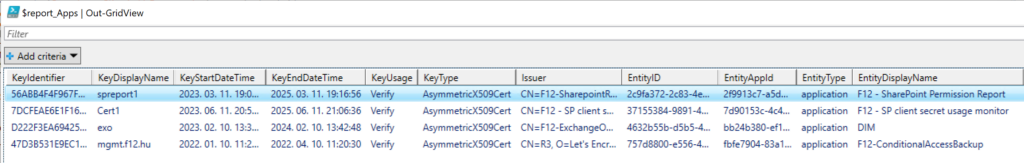

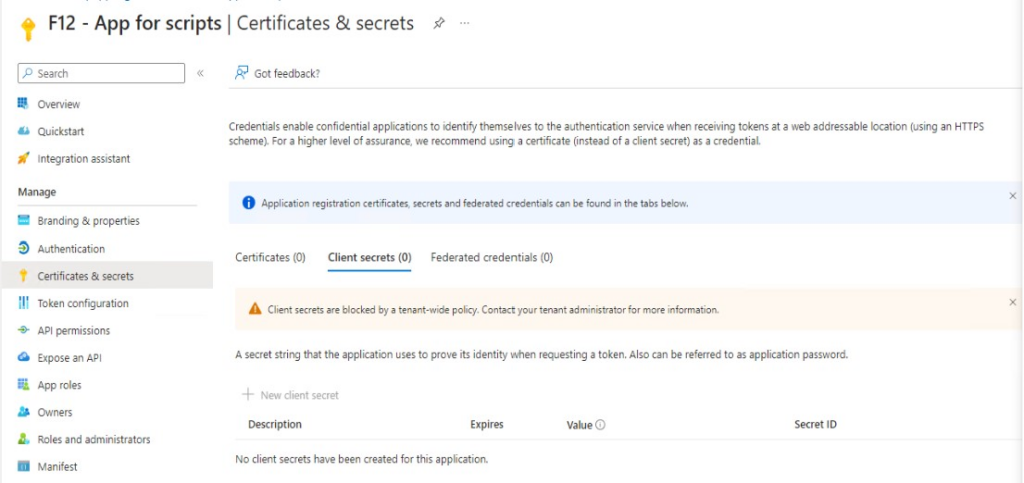

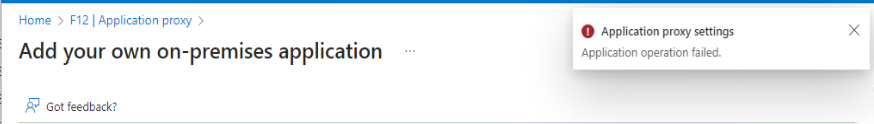

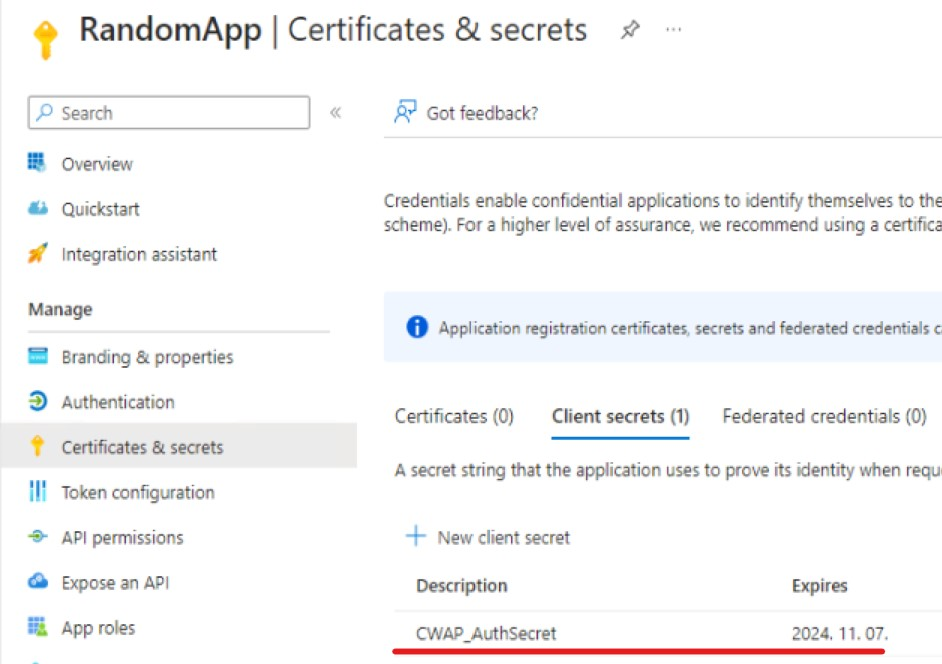

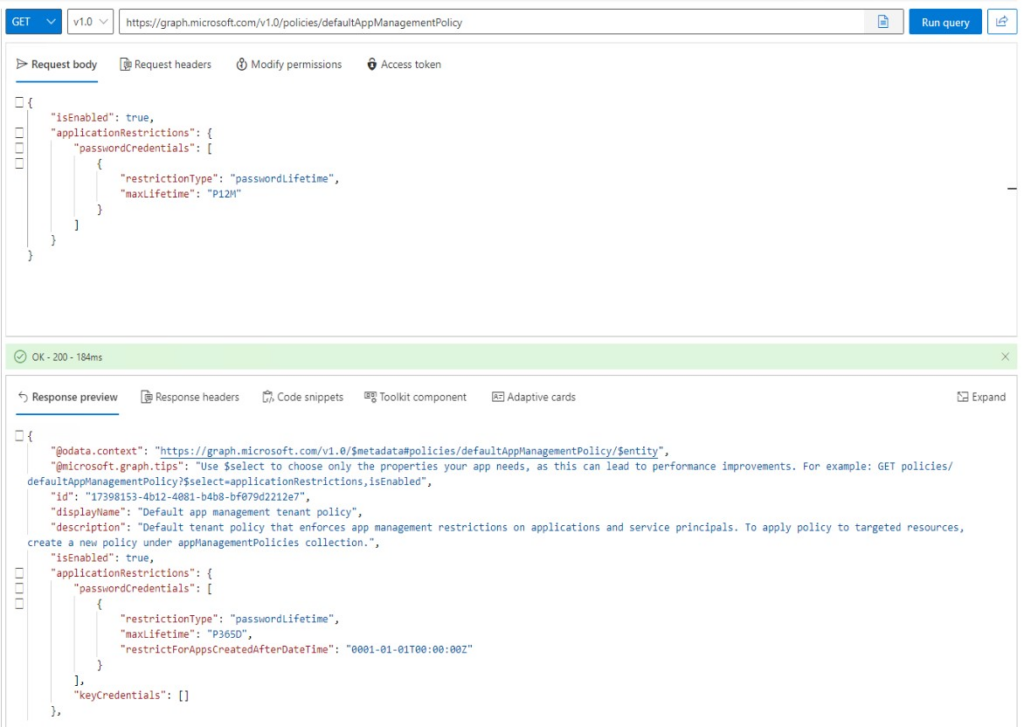

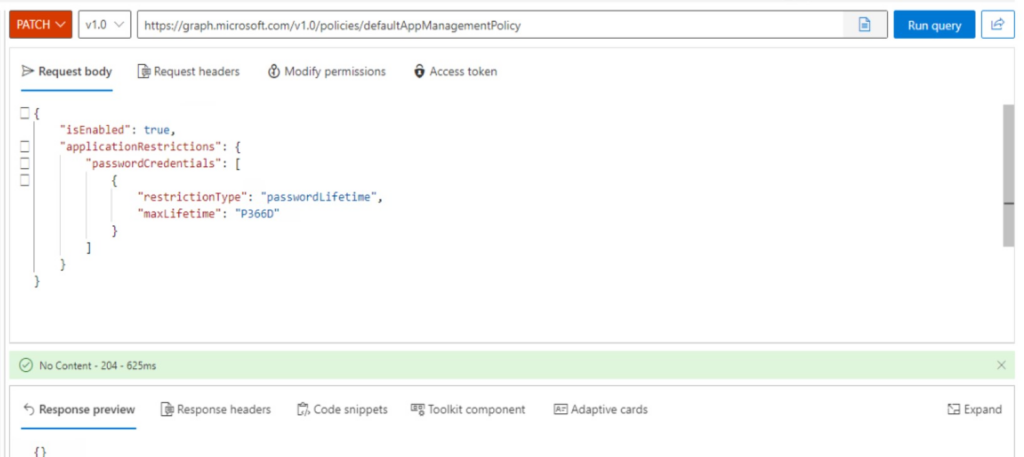

One of my previous posts covered a “basic” way to track secure score changes using Graph API with application permissions. While I still prefer application permissions (over service accounts) for unattended access to certain resources, sometimes it is not possible – for example when you want to access resources which are behind the Defender portal’s apiproxy (like the scoreImpactChangeLogs node in the secureScore report). To overcome this issue, I decided to use Entra Certificate-based Authentication as this method provides a “scriptable” (and “MFA capable”) way to access these resources.

A lot of credit goes to the legendary Dr. Nestori Syynimaa (aka DrAzureAD) and the AADInternals toolkit (specifically its CBA module, which gave me the fundamentals for understanding the authentication flow). My script is mostly a stripped-down version of his work, but it targets the security.microsoft.com portal. Credit also goes to Marius Solbakken for his great blog post on Azure AD CBA, which gave me the hint to fix an error during the authentication flow (details below).

TL;DR

- the script uses certificate-based auth (not to be confused with app auth with a certificate) to access https://security.microsoft.com/apiproxy/mtp/secureScore/security/secureScoresV2, which is used to display secure score information on the Defender portal

- prerequisites: Entra CBA configured for the “service account”, appropriate permissions granted to the account to access secure score information, and a certificate to be used for auth

- the script provided is only for research/entertainment purposes; this post is more about the journey and the caveats than the result

- tested on Windows PowerShell (v5.1); encountered issues with PowerShell (v7.5)

The script

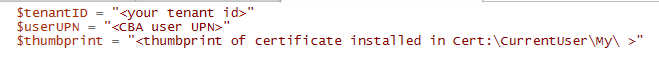

$tenantID = "<your tenant id>"

$userUPN = "<CBA user UPN>"

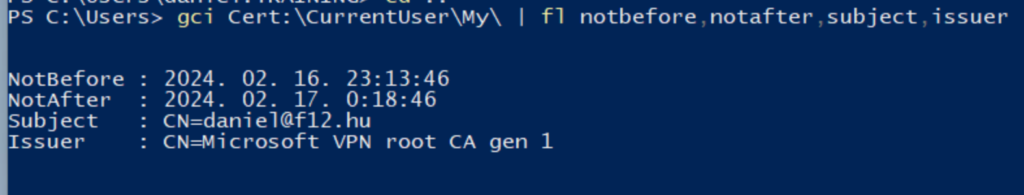

$thumbprint = "<thumbprint of certificate installed in Cert:\CurrentUser\My\ >"

function Extract-Config ($inputstring){

$regex_pattern = '\$Config=.*'

$match = [regex]::Match($inputstring, $regex_pattern) #avoid assigning to the automatic $matches variable

$config = $match.Value.Replace("`$Config=","") #remove $Config=

$config = $config.Trim().TrimEnd(';') #remove trailing whitespace and the last semicolon

$config | ConvertFrom-Json

}

#https://learn.microsoft.com/en-us/entra/identity/authentication/concept-authentication-web-browser-cookies

##Cert auth to security.microsoft.com

# Credit: https://github.com/Gerenios/AADInternals/blob/master/CBA.ps1

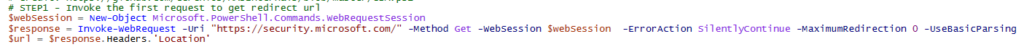

# STEP1 - Invoke the first request to get redirect url

$webSession = New-Object Microsoft.PowerShell.Commands.WebRequestSession

$response = Invoke-WebRequest -Uri "https://security.microsoft.com/" -Method Get -WebSession $webSession -ErrorAction SilentlyContinue -MaximumRedirection 0 -UseBasicParsing

$url = $response.Headers.'Location'

# STEP2 - Send HTTP GET to RedirectUrl

$login_get = Invoke-WebRequest -Uri $Url -Method Get -WebSession $WebSession -ErrorAction SilentlyContinue -UseBasicParsing -MaximumRedirection 0

# STEP3 - Send POST to GetCredentialType endpoint

#Credit: https://goodworkaround.com/2022/02/15/digging-into-azure-ad-certificate-based-authentication/

$GetCredentialType_Body = @{

username = $userUPN

flowtoken = (Extract-Config -inputstring $login_get.Content).sFT

}

$getCredentialType_response = Invoke-RestMethod -method Post -uri "https://login.microsoftonline.com/common/GetCredentialType?mkt=en-US" -ContentType "application/json" -WebSession $webSession -Headers @{"Referer"= $url; "Origin" = "https://login.microsoftonline.com"} -Body ($GetCredentialType_Body | convertto-json -Compress) -UseBasicParsing

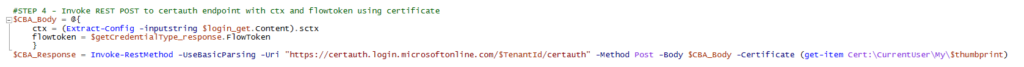

#STEP 4 - Invoke REST POST to certauth endpoint with ctx and flowtoken using certificate

$CBA_Body = @{

ctx = (Extract-Config -inputstring $login_get.Content).sctx

flowtoken = $getCredentialType_response.FlowToken

}

$CBA_Response = Invoke-RestMethod -UseBasicParsing -Uri "https://certauth.login.microsoftonline.com/$TenantId/certauth" -Method Post -Body $CBA_Body -Certificate (get-item Cert:\CurrentUser\My\$thumbprint)

#STEP 5 - Send authentication information to the login endpoint

$login_msolbody = $null

$login_msolbody = @{

login = $userUPN

ctx = ($CBA_Response.html.body.form.input.Where({$_.name -eq "ctx"})).value

flowtoken = ($CBA_Response.html.body.form.input.Where({$_.name -eq "flowtoken"})).value

canary = ($CBA_Response.html.body.form.input.Where({$_.name -eq "canary"})).value

certificatetoken = ($CBA_Response.html.body.form.input.Where({$_.name -eq "certificatetoken"})).value

}

$headersToUse = @{

'Referer'="https://certauth.login.microsoftonline.com/"

'Origin'= "https://certauth.login.microsoftonline.com"

}

$login_postCBA = Invoke-WebRequest -UseBasicParsing -Uri "https://login.microsoftonline.com/common/login" -Method Post -Body $login_msolbody -Headers $headersToUse -WebSession $webSession

#STEP 6 - Make a request to login.microsoftonline.com/kmsi to get code and id_token

$login_postCBA_config = (Extract-Config -inputstring $login_postCBA.Content)

$KMSI_body = @{

"LoginOptions" = "3"

"type" = "28"

"ctx" = $login_postCBA_config.sCtx

"hpgrequestid" = $login_postCBA_config.sessionId

"flowToken" = $login_postCBA_config.sFT

"canary" = $login_postCBA_config.canary

"i19" = "2326"

}

$KMSI_response = Invoke-WebRequest -UseBasicParsing -Uri "https://login.microsoftonline.com/kmsi" -Method Post -WebSession $WebSession -Body $KMSI_body

#STEP 7 - add sessionID cookie to the websession as this will be required to access security.microsoft.com (probably unnecessary)

#$websession.Cookies.Add((New-Object System.Net.Cookie("s.SessID", ($response.BaseResponse.Cookies | ? {$_.name -eq "s.SessID"}).value, "/", "security.microsoft.com"))) #s.SessID cookie is retrieved during first GET to defender portal

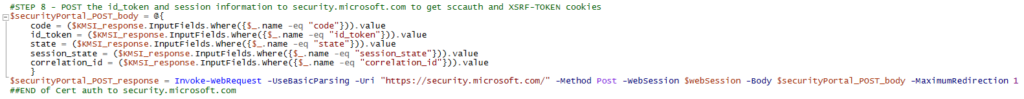

#STEP 8 - POST the id_token and session information to security.microsoft.com to get sccauth and XSRF-TOKEN cookies

$securityPortal_POST_body = @{

code = ($KMSI_response.InputFields.Where({$_.name -eq "code"})).value

id_token = ($KMSI_response.InputFields.Where({$_.name -eq "id_token"})).value

state = ($KMSI_response.InputFields.Where({$_.name -eq "state"})).value

session_state = ($KMSI_response.InputFields.Where({$_.name -eq "session_state"})).value

correlation_id = ($KMSI_response.InputFields.Where({$_.name -eq "correlation_id"})).value

}

$securityPortal_POST_response = Invoke-WebRequest -UseBasicParsing -Uri "https://security.microsoft.com/" -Method Post -WebSession $webSession -Body $securityPortal_POST_body -MaximumRedirection 1

##END of Cert auth to security.microsoft.com

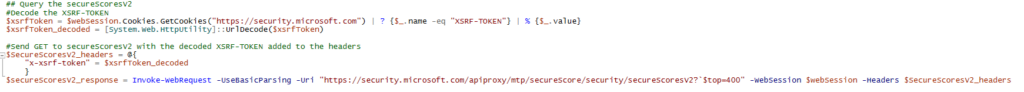

## Query the secureScoresV2

#Decode the XSRF-TOKEN

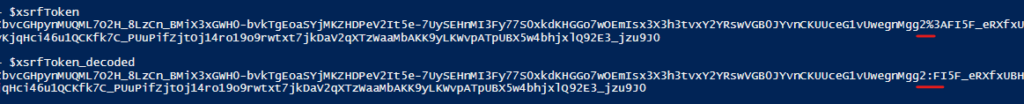

$xsrfToken = $webSession.Cookies.GetCookies("https://security.microsoft.com") | ? {$_.name -eq "XSRF-TOKEN"} | % {$_.value}

Add-Type -AssemblyName System.Web #make sure the assembly that provides HttpUtility is loaded

$xsrfToken_decoded = [System.Web.HttpUtility]::UrlDecode($xsrfToken)

#Send GET to secureScoresV2 with the decoded XSRF-TOKEN added to the headers

$SecureScoresV2_headers = @{

"x-xsrf-token" = $xsrfToken_decoded

}

$secureScoresV2_response = Invoke-WebRequest -UseBasicParsing -Uri "https://security.microsoft.com/apiproxy/mtp/secureScore/security/secureScoresV2?`$top=400" -WebSession $webSession -Headers $SecureScoresV2_headers

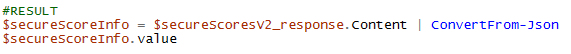

#RESULT

$secureScoreInfo = $secureScoresV2_response.Content | ConvertFrom-Json

$secureScoreInfo.value

Explained

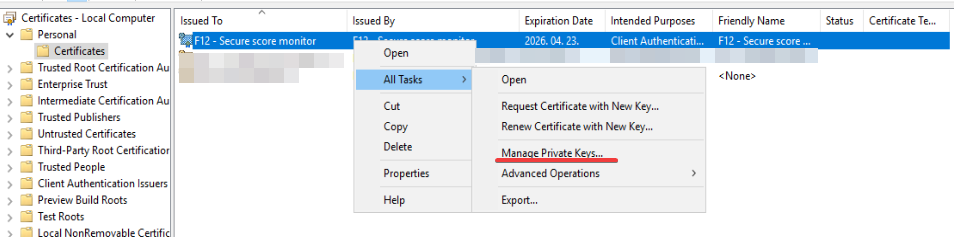

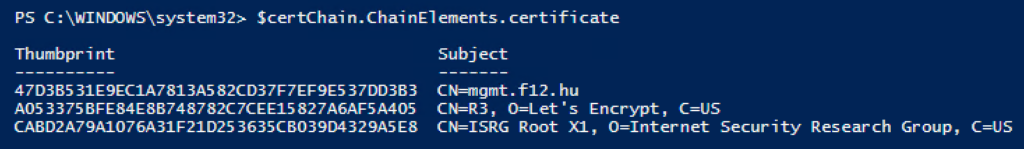

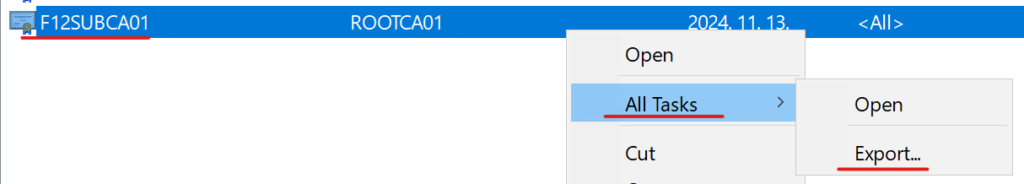

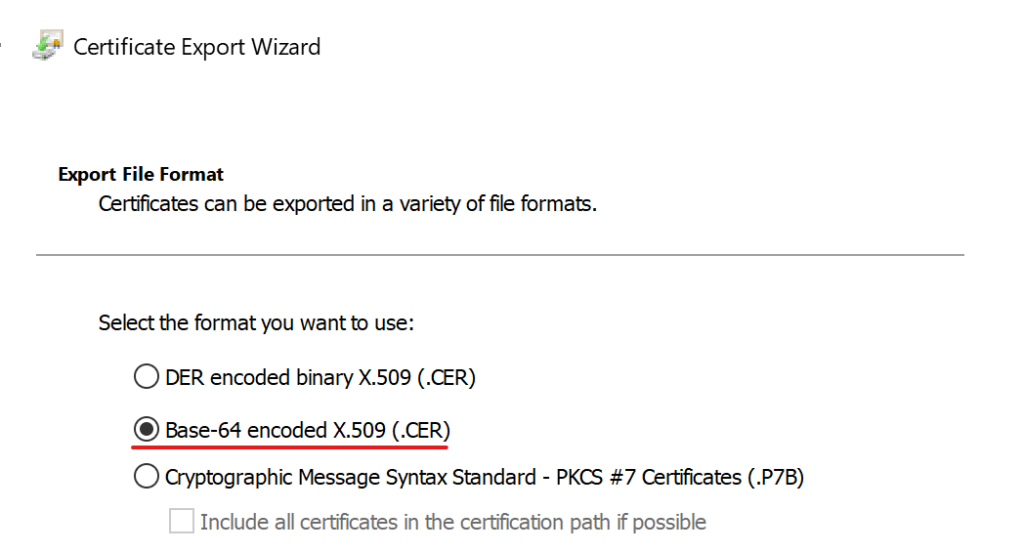

Since I’m not a developer, I will explain all the steps (the result of research and a lot of guesswork) as I experienced them (let’s call it the sysadmin perspective). Essentially, this script “mimics” a user who opens the Defender portal, authenticates with CBA and clicks on Secure Score; it returns the raw information that the browser would transform into something user-friendly. As a prerequisite, the certificate (with the private key) needs to be installed in the Current User personal certificate store of the user running the script.
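A quick sanity check for this prerequisite could look like the sketch below. Get-CbaCertificate is a hypothetical helper name of my own (it is not part of the script or of AADInternals), and the expiry check is an extra assumption on my side; it only relies on the certificate living in Cert:\CurrentUser\My as described above.

```powershell
# Hypothetical helper: returns the certificate only if it looks usable for CBA
function Get-CbaCertificate {
    param(
        [Parameter(Mandatory = $true)]
        [string]$Thumbprint
    )

    # Look the certificate up in the current user's personal store
    $cert = Get-Item -Path "Cert:\CurrentUser\My\$Thumbprint" -ErrorAction SilentlyContinue
    if (-not $cert) {
        throw "Certificate $Thumbprint was not found in Cert:\CurrentUser\My"
    }

    # Without the private key the TLS client authentication cannot succeed
    if (-not $cert.HasPrivateKey) {
        throw "Certificate $Thumbprint has no private key available"
    }

    # Assumption: an expired certificate is rejected, so fail early
    if ($cert.NotAfter -lt (Get-Date)) {
        throw "Certificate $Thumbprint expired on $($cert.NotAfter)"
    }

    $cert
}

# Usage (with the thumbprint variable from the script):
# $cert = Get-CbaCertificate -Thumbprint $thumbprint
```

The returned object could then be passed to the -Certificate parameter instead of calling Get-Item inline.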

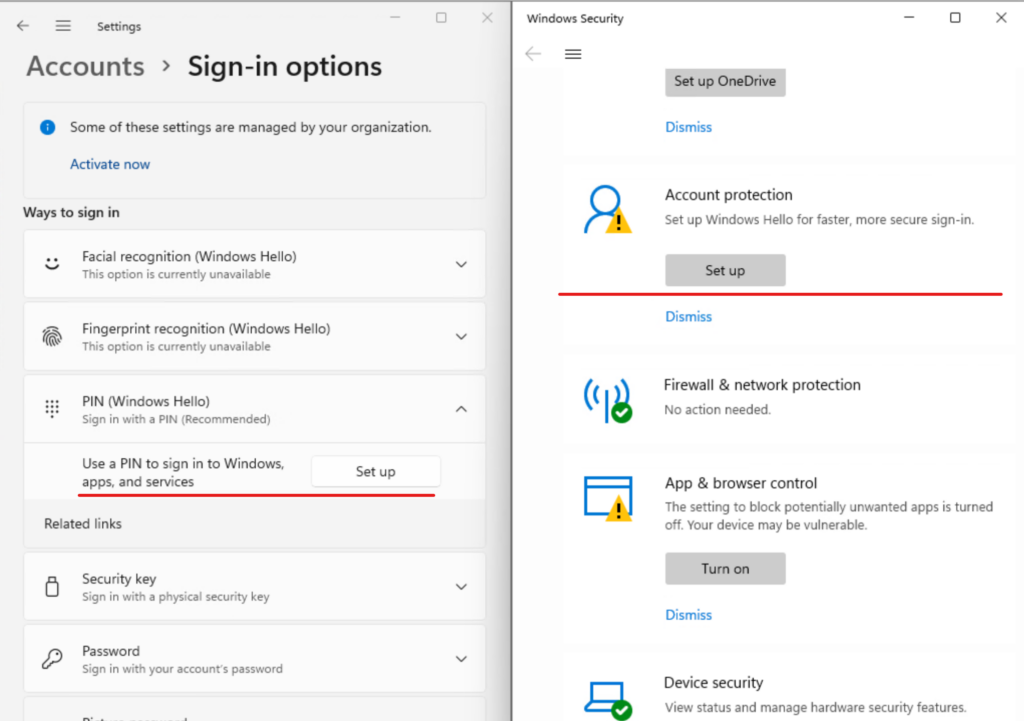

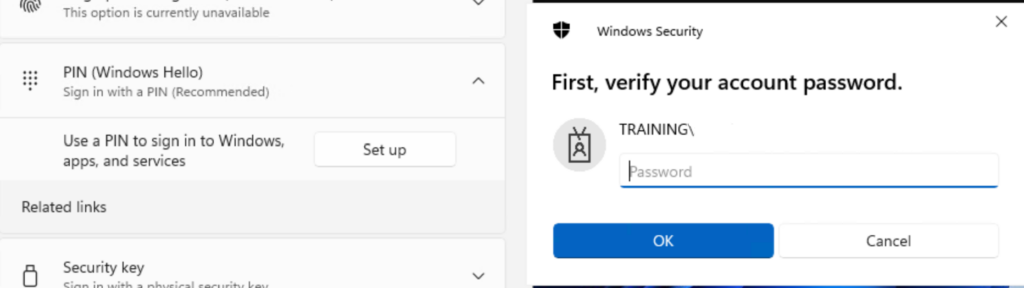

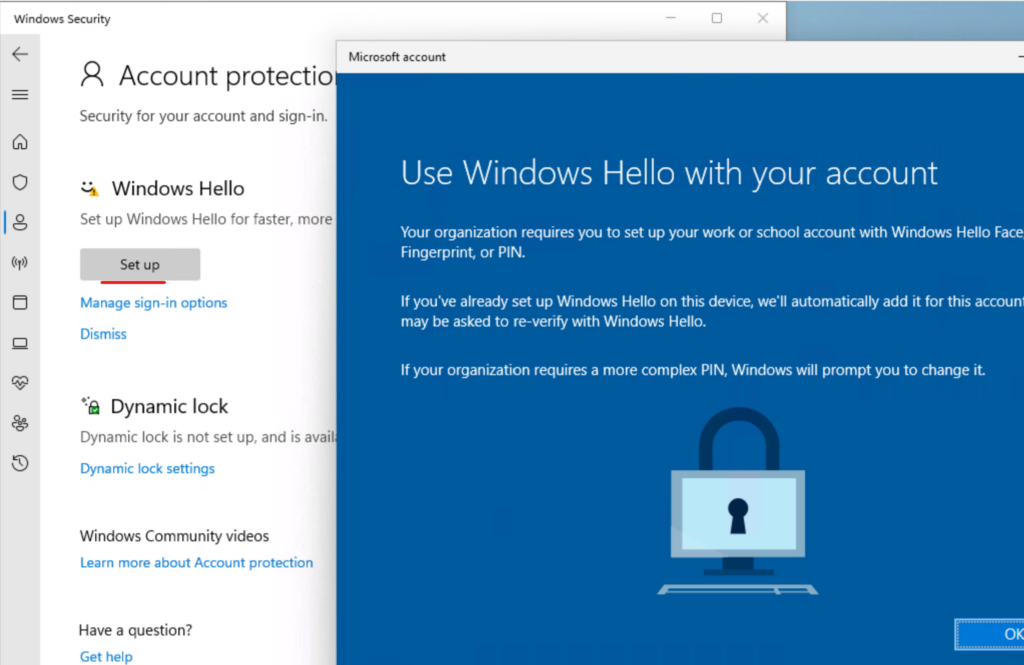

Step 0 is to populate the $tenantID, $userUPN and $thumbprint variables accordingly.

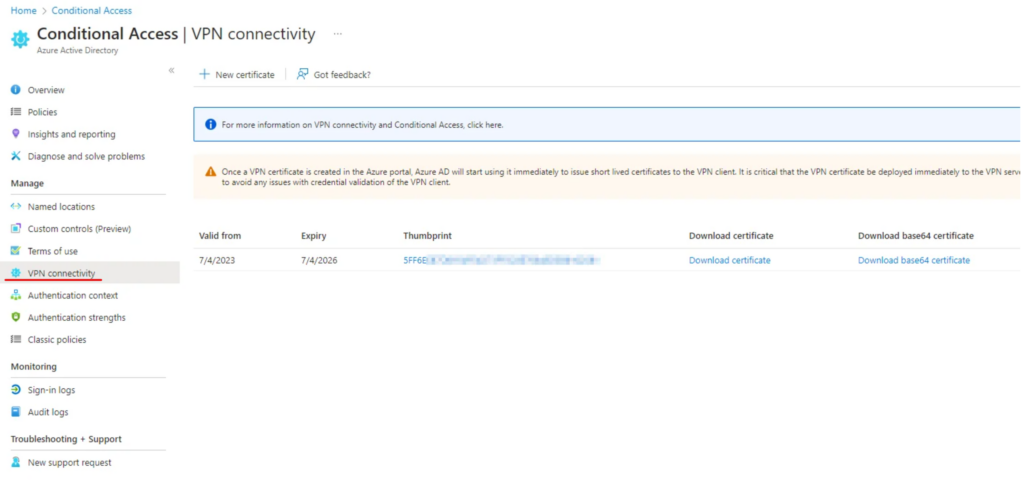

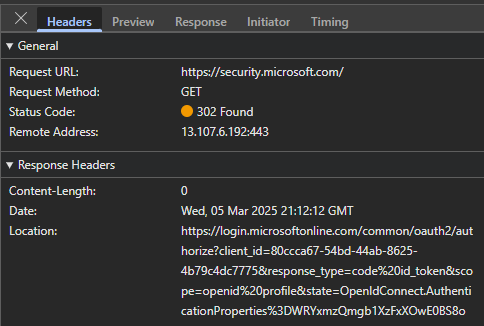

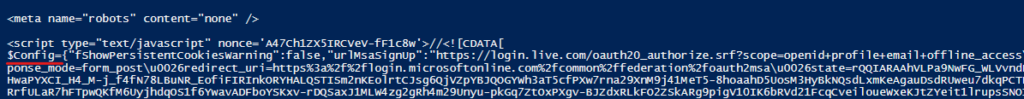

Step 1 is creating a WebRequestSession object (like a browser session, from my perspective the $websession variable is just a cookie store) and navigating to https://security.microsoft.com. When performed in a browser, we get redirected to the login portal – if we open the browser developer tools, we can see in the network trace that this means a 302 HTTP code (redirect) with a Location header in the response. This is where we get redirected:

From the script aspect, we will store this Location header in the $url variable:

Notice that every Invoke-WebRequest/Invoke-RestMethod command uses the -UseBasicParsing parameter. According to the documentation, this parameter is deprecated in newer PowerShell versions, and from v6.0.0 all requests use basic parsing only. However, I’m using v5.1, which relies on Internet Explorer to parse the content unless -UseBasicParsing is specified – so if Internet Explorer is not configured, is disabled or is otherwise unavailable, the command could fail.

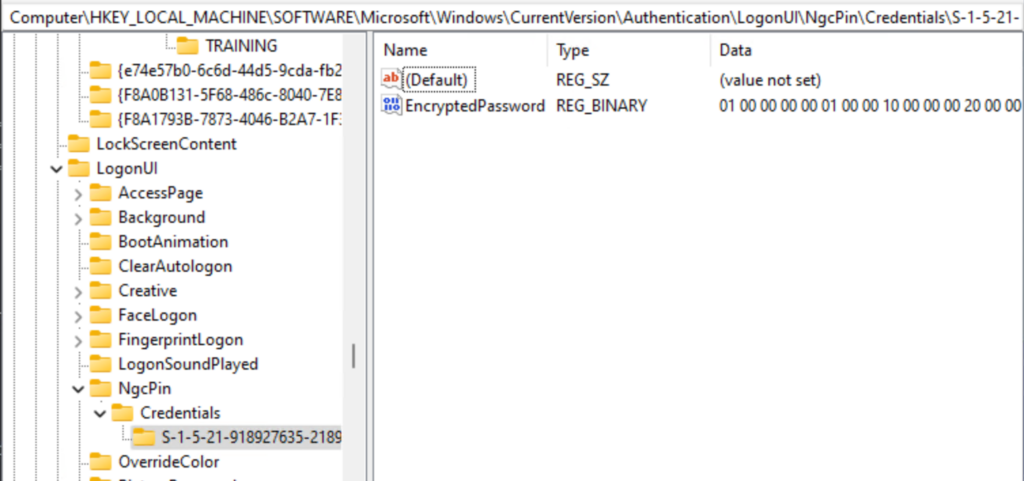

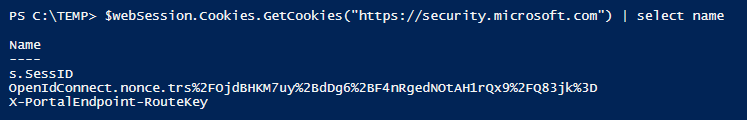

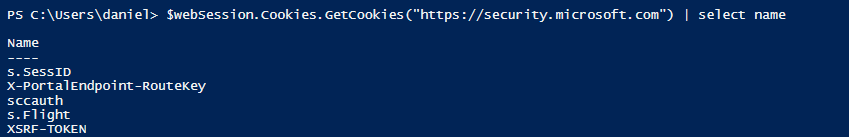

At this point the $webSession variable contains the following cookies for security.microsoft.com: s.SessID, X-PortalEndpoint-RouteKey and an OpenIdConnect.nonce:

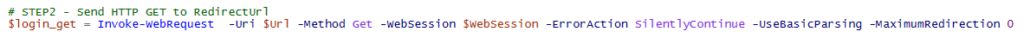

Step 2 is to open the redirectUrl:

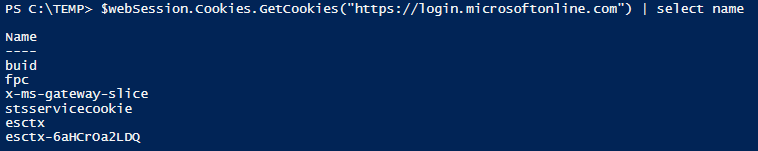

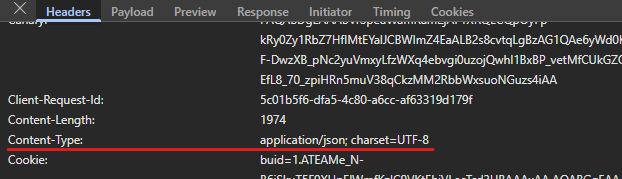

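When opened, we receive some cookies for login.microsoftonline.com, including buid, fpc and esctx (the cookies are documented at https://learn.microsoft.com/en-us/entra/identity/authentication/concept-authentication-web-browser-cookies):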

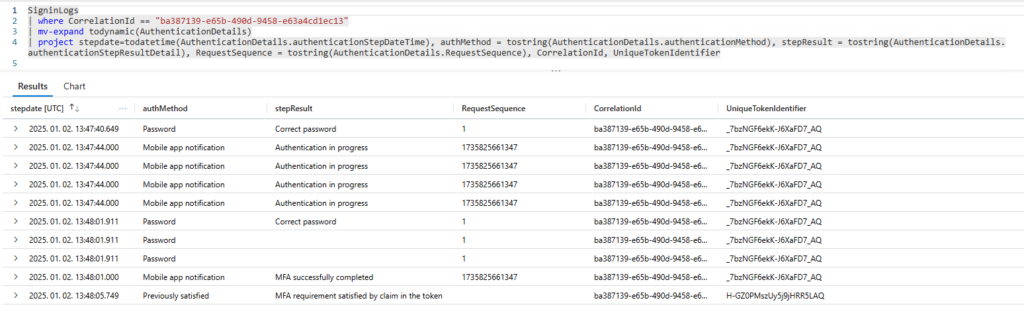

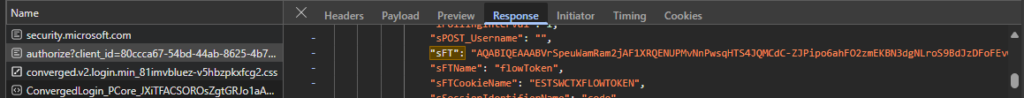

But the most important information is the flowtoken (sFT) which can be found in the response content. In the browser trace it looks like this:

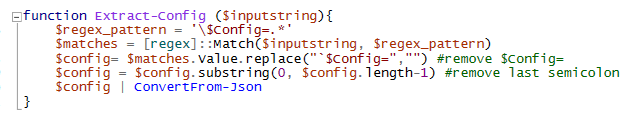

In PowerShell, the response content is the Content member of the $login_get variable, returned as a string. It needs to be parsed because the flowtoken is embedded in a script HTML node, in a JSON object assigned to $Config:

I’m using the Extract-Config function to get this configuration data (later I found that AADInternals is using the Get-Substring function defined in CommonUtils.ps1 which is more sophisticated 🙃):
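To illustrate the parsing on its own, here is a minimal sketch that runs against a made-up, heavily shortened stand-in for the login page. The sample content, its values and the Get-LoginPageConfig name are assumptions for demonstration only; the real page carries a much larger $Config object.

```powershell
# Hypothetical, shortened stand-in for the content returned by the login page
$sampleContent = @'
<html><head><script type="text/javascript">
//<![CDATA[
$Config={"sFT":"sample-flowtoken","sCtx":"sample-ctx","canary":"sample-canary"};
//]]>
</script></head></html>
'@

# Hypothetical helper: captures the JSON between '$Config=' and the closing ';'
function Get-LoginPageConfig {
    param(
        [Parameter(Mandatory = $true)]
        [string]$Content
    )

    $match = [regex]::Match($Content, '\$Config=(\{.*\});')
    if (-not $match.Success) {
        throw 'No $Config block was found in the response content'
    }

    # The first capture group holds only the JSON object
    $match.Groups[1].Value | ConvertFrom-Json
}

$config = Get-LoginPageConfig -Content $sampleContent
$config.sFT    # sample-flowtoken
$config.sCtx   # sample-ctx
```

Using a capture group avoids the separate "remove the prefix, remove the last character" steps, and the explicit check makes a changed page layout fail loudly instead of producing an empty flowtoken.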

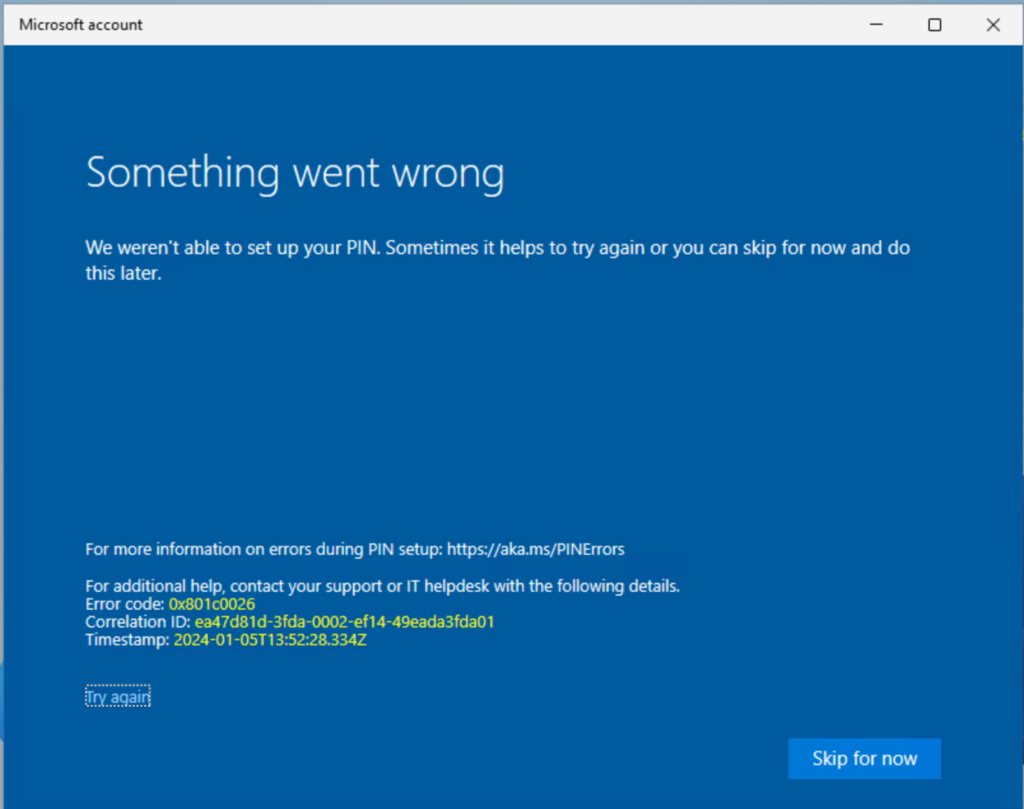

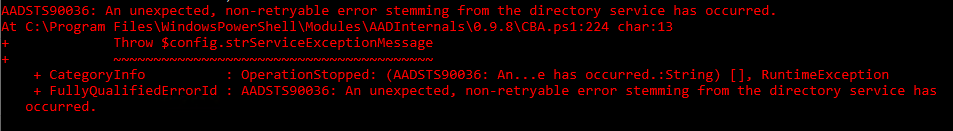

Step 3 took some time to figure out. When I tried to use AADInternals’ Get-AADIntAdminPortalAccessTokenUsingCBA command, I got an error message:

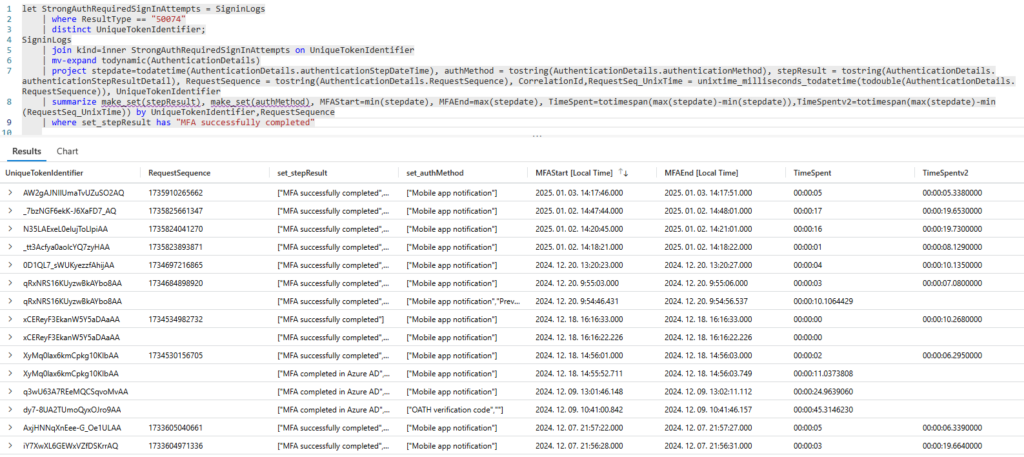

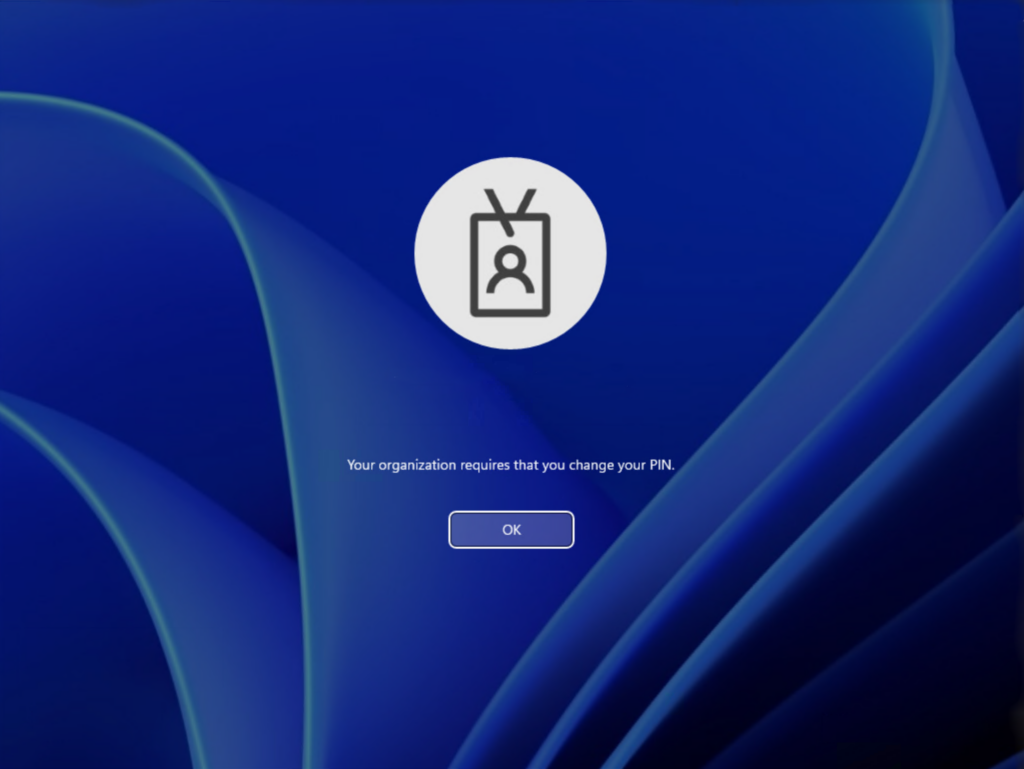

Luckily, I found Marius Solbakken’s blog post (https://goodworkaround.com/2022/02/15/digging-into-azure-ad-certificate-based-authentication/), which led me to think that this GetCredentialType call is missing in AADInternals (or something is misconfigured on my side and the call can normally be skipped). This call – from my standpoint – returns a new flowtoken, and this new one needs to be sent to the certauth endpoint. (Until I figured it out, every attempt to authenticate on the certauth endpoint resulted in AADSTS90036.)

Step 4 is basically the same as in the AADInternals module: the flowtoken and ctx are posted to the certauth.login.microsoftonline.com endpoint.

Notice that in the Step 3 call the ContentType parameter is set to “application/json” – where it is not specified (as in Step 4), it defaults to “application/x-www-form-urlencoded” for a POST call. In the browser trace, this is defined in the Content-Type header:
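The difference between the two body formats is easier to see side by side. The sketch below uses made-up sample values and builds the form-encoded string by hand purely for illustration; the web cmdlets do their own encoding when a hashtable is passed as the body.

```powershell
# Sample values only; the real flowtoken comes from the login page
$body = [ordered]@{
    username  = 'cba.user@contoso.com'
    flowtoken = 'sample-flowtoken'
}

# JSON body, as sent together with -ContentType "application/json"
$jsonBody = $body | ConvertTo-Json -Compress

# Roughly what a form-encoded body looks like for the same data
$formBody = ($body.GetEnumerator() | ForEach-Object {
    '{0}={1}' -f [uri]::EscapeDataString($_.Key), [uri]::EscapeDataString($_.Value)
}) -join '&'

$jsonBody   # {"username":"cba.user@contoso.com","flowtoken":"sample-flowtoken"}
$formBody   # username=cba.user%40contoso.com&flowtoken=sample-flowtoken
```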

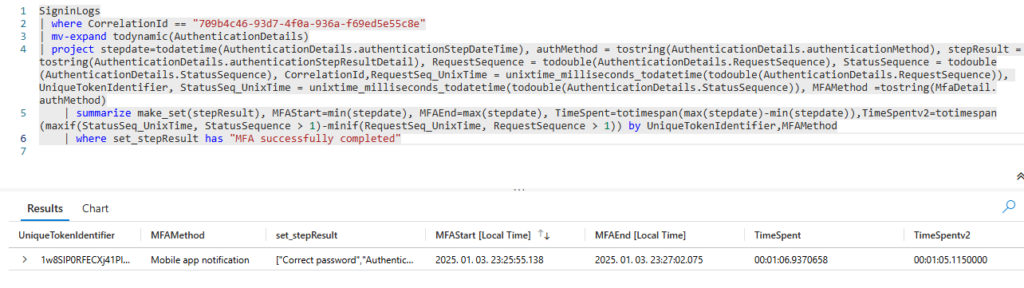

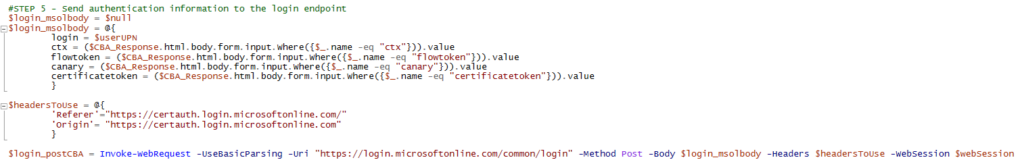

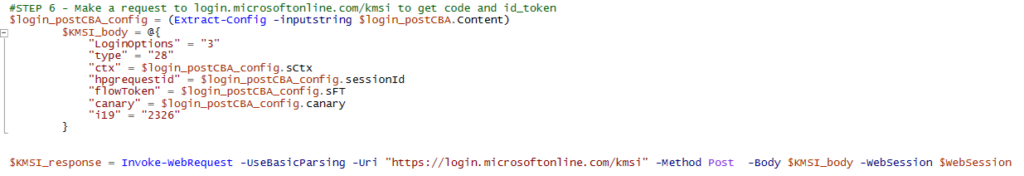

Step 5 is slightly different from AADInternals’ CBA module, but follows the same logic: send the login (userPrincipalName), ctx, flowtoken, canary and certificatetoken values to the https://login.microsoftonline.com/common/login endpoint. In turn, we receive the updated flowtoken, ctx, sessionId and canary values, which are posted to the https://login.microsoftonline.com/kmsi endpoint in Step 6.

The KMSI_response contains the id_token, code, state, session_state and correlation_id. When we look back on the browser trace, we will see that these parameters are passed to the security.microsoft.com portal to authenticate the user.
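Several steps read hidden input fields from a form in the response and post them onwards. As a rough illustration of that pattern, the sketch below collects every input of a made-up sample form into a hashtable that could serve as the next POST body. The sample HTML and its values are assumptions; the real responses carry more fields and much longer values.

```powershell
# Hypothetical, shortened stand-in for a response that carries a hidden form
[xml]$sampleForm = @'
<html><body>
<form method="POST" action="https://security.microsoft.com/">
<input type="hidden" name="code" value="sample-code" />
<input type="hidden" name="id_token" value="sample-id-token" />
<input type="hidden" name="state" value="sample-state" />
</form>
</body></html>
'@

# Collect name/value pairs of all input elements
$postBody = @{}
foreach ($field in $sampleForm.html.body.form.input) {
    # GetAttribute reads the HTML attribute explicitly
    $postBody[$field.GetAttribute('name')] = $field.GetAttribute('value')
}

$postBody['id_token']   # sample-id-token
$postBody.Count         # 3
```

Collecting all fields in a loop means the body does not have to be updated by hand if the form gains or loses a field, at the cost of being less explicit about what is sent.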

Step 7 is probably totally unnecessary (hence commented out) and is likely the result of too much desperate testing. It just adds the s.SessID cookie to our websession. The cookie itself is needed during authentication (without it, you will immediately receive timeout errors), but it is already received upon the first request, so it should be in the session anyway. (I guess my testing involved clearing some variables… anyway, it won’t hurt.)
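To check whether a cookie is already in the session (and to see what adding one by hand does), the cookie container can be inspected offline. This is a minimal sketch with a made-up cookie value, using a fresh session object rather than the one from the script.

```powershell
# Fresh session object, independent of the $webSession used in the script
$session = New-Object Microsoft.PowerShell.Commands.WebRequestSession

# Sample value only; the real s.SessID is issued by security.microsoft.com
$cookie = New-Object System.Net.Cookie('s.SessID', 'sample-session-id', '/', 'security.microsoft.com')
$session.Cookies.Add($cookie)

# List the cookies that would be sent to the portal
$portalCookies = $session.Cookies.GetCookies('https://security.microsoft.com')
$portalCookies | Select-Object Name, Value, Domain

# Cookies are scoped by domain, so nothing is returned for the login endpoint
$loginCookies = $session.Cookies.GetCookies('https://login.microsoftonline.com')
$loginCookies.Count   # 0
```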

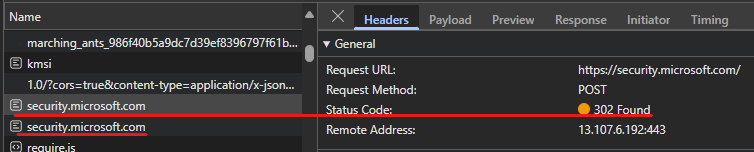

Step 8 is the final step in this authentication journey: we post content we received in the $KMSI_response variable. In the browser trace we can see that an HTTP 302 is the status code for this request, followed by a new request to the same endpoint.

This is why the -MaximumRedirection parameter is set to 1 in this step. (Some of my tests failed with 1 redirection allowed, so if it fails, it can be increased to 5 for example).

Finally we have the sccauth and XSRF-TOKEN cookies which are required to access resources.

I thought this was the green light: all I needed was to use the websession to access secureScoresV2. However, some tweaking was required because Invoke-WebRequest failed with the following error message:

Invoke-WebRequest : {"Message":"The web page isn\u0027t loading correctly. Please reload the page by refreshing your browser, or try deleting the cookies from your browser and then sign in again. If the problem persists, contact

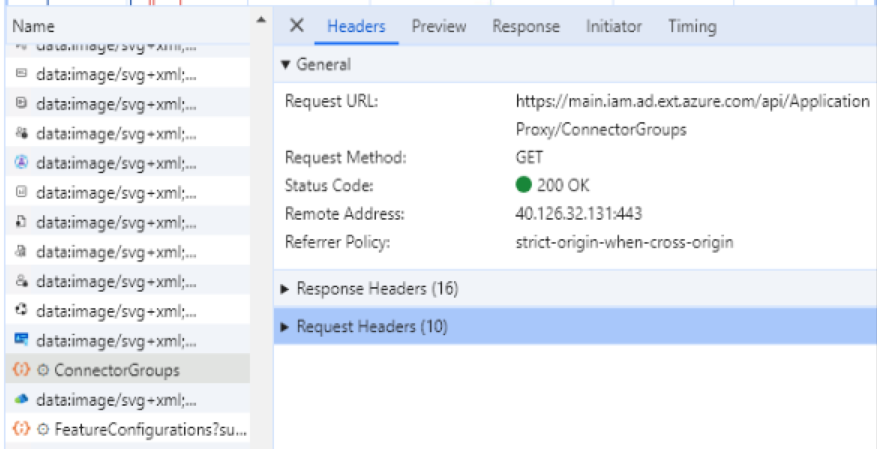

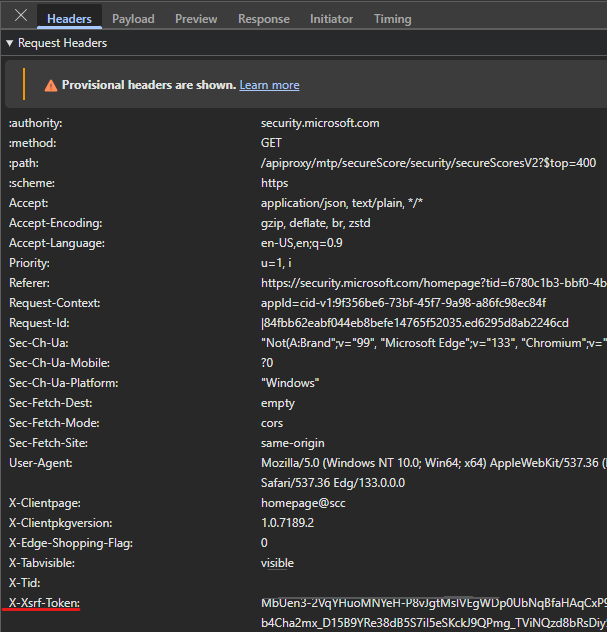

Microsoft support."Taking a look on the request, I noticed that the XSRF-TOKEN is used as X-Xsrf-Token header info (even though the cookie is present in the $websession)

It took some (~a lot of) time to figure out that this token is URL-encoded, so it needs to be decoded before using it as a header:

So once we have the decoded token, it can be used as x-xsrf-token:
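As a standalone illustration of the decoding, here is a sketch with a made-up token value. It uses [System.Net.WebUtility]::UrlDecode, which I swapped in for HttpUtility here because it does not need the System.Web assembly to be loaded; for a value like this one, both should give the same result.

```powershell
# Sample value only; the real XSRF-TOKEN cookie is much longer
$encodedToken = 'CfDJ8%2Babc%2Fdef%3D%3D'

# Turn the percent-encoded characters back into '+', '/' and '='
$decodedToken = [System.Net.WebUtility]::UrlDecode($encodedToken)

# The decoded value goes into the request header
$headers = @{
    'x-xsrf-token' = $decodedToken
}

$headers['x-xsrf-token']   # CfDJ8+abc/def==
```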

The response content is in JSON format, the ConvertFrom-Json cmdlet will do the parsing.

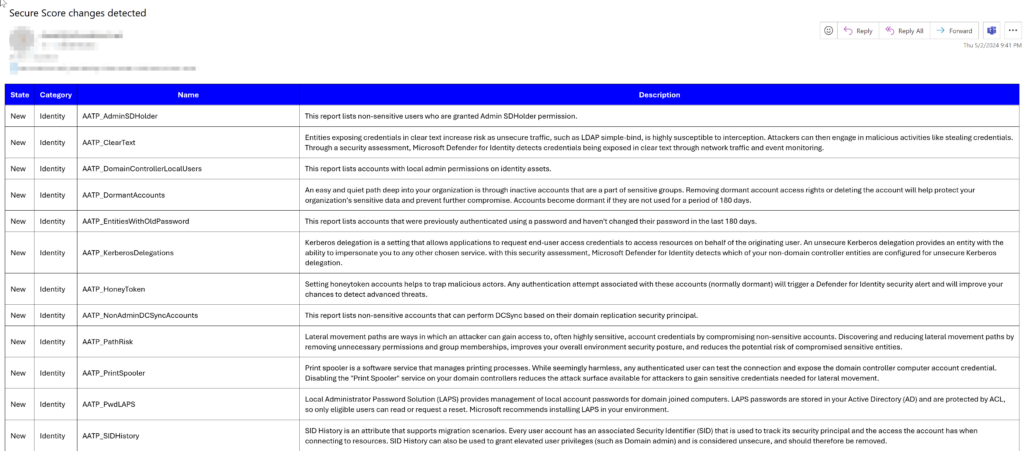

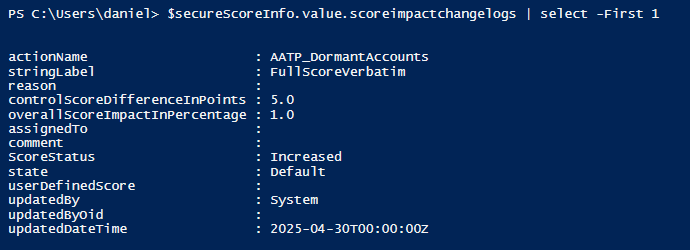

Compared to the secureScore exposed by Graph API, here we have the ScoreImpactChangeLogs property, which is missing in Graph.

This is just one example (of endless possibilities) of using Entra CBA to access the Defender portal, but my main goal was to share my findings and give a hint on reaching other useful stuff on security.microsoft.com.